Watts vs Amps: Understanding the Difference

When it comes to electricity, understanding the basic units of measurement is crucial for safety and efficiency. Watts and amps are two fundamental units that describe electrical properties but represent different aspects of electricity.

In this article, we will delve into the definitions of watts and amps, explore their differences, and explain how they relate.

What Are Watts?

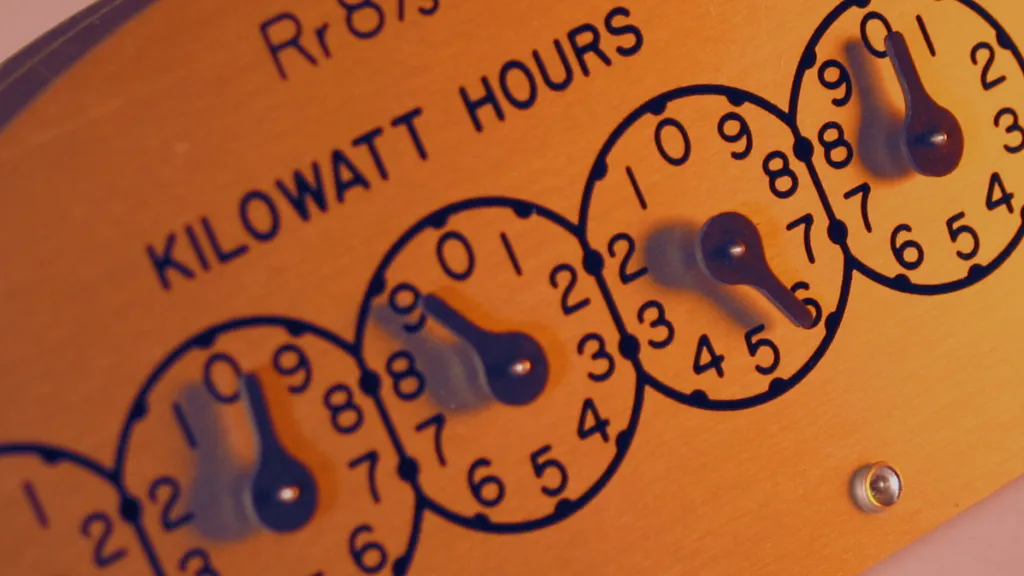

The watt (W) is the unit of power in the International System of Units (SI). Power is the rate at which energy is used or transferred, and one watt equals one joule per second.

In electrical terms, watts measure how much energy an electrical device consumes or generates per second. For example, a light bulb rated at 60 watts consumes 60 joules of energy every second it is turned on.
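The light bulb arithmetic can be sketched in a few lines of Python. This is a minimal illustration that assumes the device draws constant power; the function names are made up for this example.

```python
def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy in joules used by a device drawing constant power."""
    return power_watts * seconds


def energy_kwh(power_watts: float, hours: float) -> float:
    """The same energy expressed in kilowatt-hours."""
    return power_watts * hours / 1000.0


# A 60 W bulb left on for one second, then for five hours.
print(energy_joules(60, 1))
print(energy_kwh(60, 5))
```

Kilowatt-hours are included because that is the unit electricity is usually billed in.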

What Are Amps?

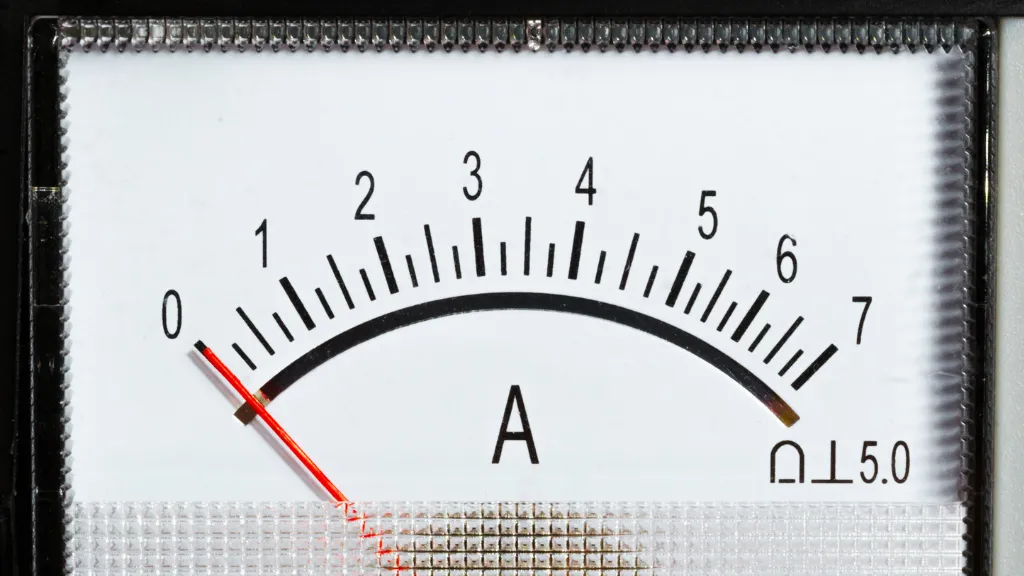

Amps, short for amperes (A), are the units of electrical current in the SI system. Electrical current is the flow of electric charge through a conductor, such as a wire.

The ampere measures the amount of charge passing a point in the circuit per second: one amp is one coulomb of charge per second. A higher amperage means more electric charge is moving through the circuit.
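Since current is charge per second, the total charge that passes a point is the current multiplied by the time. A small sketch, assuming a steady current (the function name is illustrative):

```python
def charge_coulombs(current_amps: float, seconds: float) -> float:
    """Charge in coulombs passing a point at a steady current (1 A = 1 C/s)."""
    return current_amps * seconds


# A steady 3 A flowing for 10 seconds moves 30 coulombs of charge.
print(charge_coulombs(3, 10))
```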

Difference Between Watts and Amps

The primary difference between watts and amps lies in what they measure: watts measure power, while amps measure current. Understanding this distinction is crucial when working with electrical devices, as it affects everything from energy consumption to the sizing of wires and safety equipment.

Practical Implications

When choosing electrical appliances or equipment, knowing the wattage and amperage helps you understand how much energy a device will consume and how much load it will place on a circuit. For instance, a high-wattage appliance uses more electricity per hour of operation and is therefore more expensive to run than a lower-wattage one.

Meanwhile, an appliance with a high amperage rating might require a dedicated circuit to handle the higher current flow without tripping a breaker.
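To make the running-cost point concrete, here is a rough sketch. The electricity price of 0.15 per kWh and the two appliance wattages are assumptions chosen only for illustration; substitute the figures from your own bill and appliance labels.

```python
def running_cost(power_watts: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running a constant-power device for the given number of hours."""
    energy_kwh = power_watts / 1000.0 * hours
    return energy_kwh * price_per_kwh


PRICE_PER_KWH = 0.15  # assumed price, not a real tariff

# Compare a hypothetical 1500 W heater with a hypothetical 60 W lamp, 2 hours each.
heater_cost = running_cost(1500, 2, PRICE_PER_KWH)
lamp_cost = running_cost(60, 2, PRICE_PER_KWH)
print(f"heater: {heater_cost:.3f}, lamp: {lamp_cost:.3f}")
```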

How Watts and Amps Relate to Each Other

The relationship between watts and amps is defined by the equation P = I × V, often written in terms of units as W = A × V. Here P is the power in watts, I is the current in amps, and V is the voltage in volts (the measure of electrical potential difference). This equation shows that the power in an electrical system is the product of the current and the voltage. It holds for direct current and for simple resistive loads on alternating current.

Therefore, if you know two of these values, you can calculate the third.

Example Calculations

For example, if you have a device that uses 3 amps of current at 120 volts, the power consumption can be calculated as follows:

Power (W) = Current (A) × Voltage (V) = 3 A × 120 V = 360 W
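The same relationship can be rearranged to find whichever value is missing. The helper below is a minimal sketch with a made-up name; it assumes DC or a simple resistive load and that the two known values are non-zero.

```python
from typing import Optional


def solve_missing(watts: Optional[float] = None,
                  amps: Optional[float] = None,
                  volts: Optional[float] = None) -> float:
    """Return the missing value in W = A x V, given exactly two of the three."""
    known = sum(value is not None for value in (watts, amps, volts))
    if known != 2:
        raise ValueError("provide exactly two of watts, amps, volts")
    if watts is None:
        return amps * volts   # W = A x V
    if amps is None:
        return watts / volts  # A = W / V
    return watts / amps       # V = W / A


print(solve_missing(amps=3, volts=120))     # power of the device in the example
print(solve_missing(watts=360, volts=120))  # current for the same device
print(solve_missing(watts=360, amps=3))     # voltage for the same device
```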

Does a device with a higher amperage draw more power than another device with the same wattage?

This is an intriguing question that delves into the relationship between current, voltage, and power in electrical devices. Let’s break it down:

First, recall the basic relationship between power, current, and voltage, expressed by the equation P = IV (where P is power in watts, I is current in amps, and V is voltage in volts).

Now, suppose we have two devices, both rated at 100 watts of power. However, one device operates at a higher amperage than the other. Since the power is held constant at 100 watts for both devices, the device with the higher amperage must be using a lower voltage, because power is the product of current and voltage.

Here’s where it gets interesting: a higher amperage does not by itself mean more power; it only means that more current is flowing. However, delivering the same power with more current at a lower voltage has consequences for energy efficiency and for the wiring that carries it.

For instance, higher current generates more heat in the device and the wiring, because resistive heating grows with the square of the current. This can require thicker wires or more robust insulation to handle the increased thermal and electrical load.
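A short sketch can put numbers on this. It compares two hypothetical 100 W devices, one at 120 V and one at 12 V, and estimates the heat dissipated in the supply wiring using the standard resistive-loss formula P = I²R. The 0.1 ohm wire resistance is an assumed value for illustration only.

```python
def current_amps(power_watts: float, volts: float) -> float:
    """Current drawn by a device of the given power at the given voltage."""
    return power_watts / volts


def wire_loss_watts(amps: float, wire_resistance_ohms: float) -> float:
    """Heat dissipated in the wiring, from P = I^2 * R."""
    return amps ** 2 * wire_resistance_ohms


WIRE_RESISTANCE_OHMS = 0.1  # assumed, not a measured value

for volts in (120, 12):
    amps = current_amps(100, volts)
    loss = wire_loss_watts(amps, WIRE_RESISTANCE_OHMS)
    print(f"{volts} V: {amps:.2f} A, wire loss {loss:.3f} W")
```

With these assumptions, the 12 V device draws ten times the current of the 120 V device, so the same wire dissipates about a hundred times as much heat.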

Can You Charge a Device Faster by Increasing Amps or Watts?

One interesting question that arises from the discussion of watts and amps is whether you can charge a device faster by increasing amps or watts. The answer sheds light on how electrical devices, particularly rechargeable batteries, work.

Understanding Charging Speed

The charging speed of a device depends on both the power the charger can supply (measured in watts) and the current the device can accept (measured in amps). Essentially, charging is faster when more energy is delivered to the device’s battery in a shorter period.

Impact of Increasing Amps

At a given voltage, increasing the charger’s amperage can shorten charging times because it increases the rate at which electrical charge flows to the battery. Most modern devices and chargers are designed to handle different amperage levels safely.

However, the device’s battery must be capable of accepting the higher current without overheating or getting damaged.

Impact of Increasing Watts

On the other hand, increasing the power (watts) of the charger, which is the product of voltage and current, can also result in faster charging. This is because a higher wattage means more energy is transferred to the battery per second.

However, this is only effective up to the maximum power input the device’s charging circuit is designed to handle.

While both increased amps and watts can potentially speed up charging times, the device’s specifications limit the effectiveness of these increases. Exceeding these specifications can lead to damage or safety hazards.
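The way the device’s limit caps the benefit of a bigger charger can be sketched as follows. This is a deliberately simplified estimate: it assumes constant charging power and no losses, whereas real batteries typically slow down as they fill. The battery capacity and wattage figures are hypothetical.

```python
def estimated_charge_hours(battery_wh: float,
                           charger_watts: float,
                           device_max_watts: float) -> float:
    """Rough time to charge from empty, ignoring losses and tapering."""
    # The device only accepts as much power as its charging circuit allows.
    effective_watts = min(charger_watts, device_max_watts)
    if effective_watts <= 0:
        raise ValueError("charging power must be positive")
    return battery_wh / effective_watts


# Hypothetical 15 Wh battery in a device that accepts at most 20 W.
print(estimated_charge_hours(15, 10, 20))  # 10 W charger: charger is the limit
print(estimated_charge_hours(15, 45, 20))  # 45 W charger: device is the limit
print(estimated_charge_hours(15, 65, 20))  # a larger charger changes nothing
```

Under these assumptions, moving from a 45 W to a 65 W charger gives no improvement, because the device’s 20 W limit already determines the result.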

Therefore, it’s important to use chargers that match the device’s recommended settings. For the fastest and safest charging, use the charger that comes with the device or one that is specifically certified for it, and make sure any increase in amps or watts stays within what the device can safely handle.

Conclusion

Understanding the difference between watts and amps and how they relate is essential for anyone dealing with electrical systems or appliances. This knowledge helps ensure safe and efficient electricity use by allowing for the proper selection and operation of electrical devices.

Remember, when dealing with electricity, always prioritize safety and consult a professional if unsure about your electrical setup.

Frequently Asked Questions

What happens if I use a device with higher watts than my outlet supports?

Using a device that draws more power than the outlet and its circuit are rated for can lead to overheated wiring, damage to the electrical system, or even fire.
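One way to check this is to convert the device’s wattage to amps and compare it with the circuit’s rating. The sketch below assumes a 120 V supply and a 15 A circuit purely as example values; check the actual ratings on your breaker panel and device label, and treat the result as a rough guide rather than a safety verdict.

```python
def device_current_amps(device_watts: float, volts: float) -> float:
    """Current the device draws, from A = W / V."""
    return device_watts / volts


def exceeds_circuit(device_watts: float, volts: float, circuit_amps: float) -> bool:
    """True if the device alone would draw more current than the circuit rating."""
    return device_current_amps(device_watts, volts) > circuit_amps


# Example values only: a 120 V supply on a 15 A circuit.
print(exceeds_circuit(600, 120, 15))   # 5 A draw
print(exceeds_circuit(2400, 120, 15))  # 20 A draw
```

Other devices on the same circuit add to the load, so the combined wattage is what matters in practice.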

Can I plug any device into any outlet?

Not always. You need to ensure that the device’s amperage and voltage requirements are compatible with what the outlet can provide.

How can I reduce my energy consumption?

Opting for energy-efficient appliances and turning off devices when not in use can significantly reduce energy consumption.

Author

Alex Klein is an electrical engineer with more than 15 years of experience. He is the host of the Electro University YouTube channel, which has thousands of subscribers.